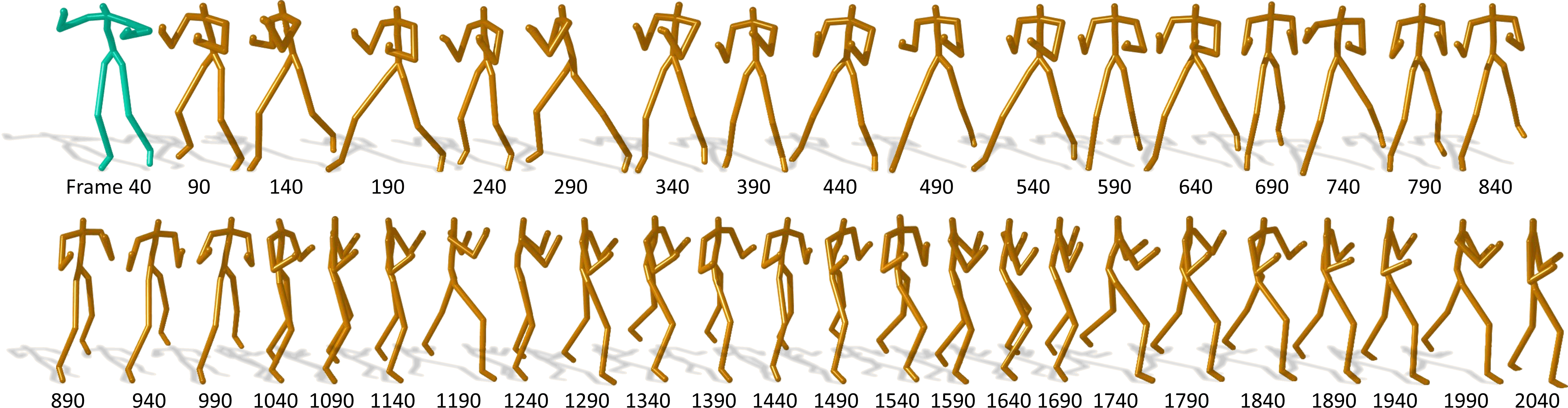

Abstract

Data-driven modeling of human motions is ubiquitous in computer graphics and computer vision applications, such as synthesizing realistic motions or recognizing actions. Recent research has shown that such problems can be approached by learning a natural motion manifold using deep learning, which addresses the shortcomings of traditional data-driven approaches. However, previous methods can be sub-optimal for two reasons. First, the skeletal information has not been fully utilized for feature extraction. Unlike in images, it is difficult to define spatial proximity in skeletal motions in a way that deep networks can exploit. Second, motion is time-series data with strong multi-modal temporal correlations. A frame could be followed by several candidate frames leading to different motions, and long-range dependencies exist where a number of frames at the beginning correlate with a number of frames later. Ineffective modeling would either under-estimate the multi-modality and variance, resulting in featureless mean motion, or over-estimate them, resulting in jittery motions. In this paper, we propose a new deep network to tackle these challenges by creating a natural motion manifold that is versatile for many applications. The network has a new spatial component for feature extraction. It is also equipped with a new batch prediction model that predicts a large number of frames at once, such that long-term temporally-based objective functions can be employed to correctly learn the motion multi-modality and variances. With our system, long-duration motions can be predicted or synthesized using an open-loop setup in which the motion accurately retains its dynamics. The system can also be used for denoising corrupted motions and synthesizing new motions with given control signals. We demonstrate that our system produces superior results compared to existing work in multiple applications.

Resources

He Wang, Edmond SL Ho, Hubert PH Shum, Zhanxing Zhu.

Spatio-temporal manifold learning for human motions via long-horizon modeling.

IEEE Transactions on Visualization and Computer Graphics (TVCG).

2019

Journal

BibTeX

@article{wang2019spatio,
  author = {He Wang and Edmond SL Ho and Hubert PH Shum and Zhanxing Zhu},
  title = {Spatio-temporal manifold learning for human motions via long-horizon modeling},
  journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)},
  year = {2019}
}

Acknowledgement

The project is partially supported by EPSRC (Ref: EP/R031193/1). The authors wish to gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan Xp GPU used for this research. The project was supported in part by the Royal Society (Ref: IES\R2\181024).