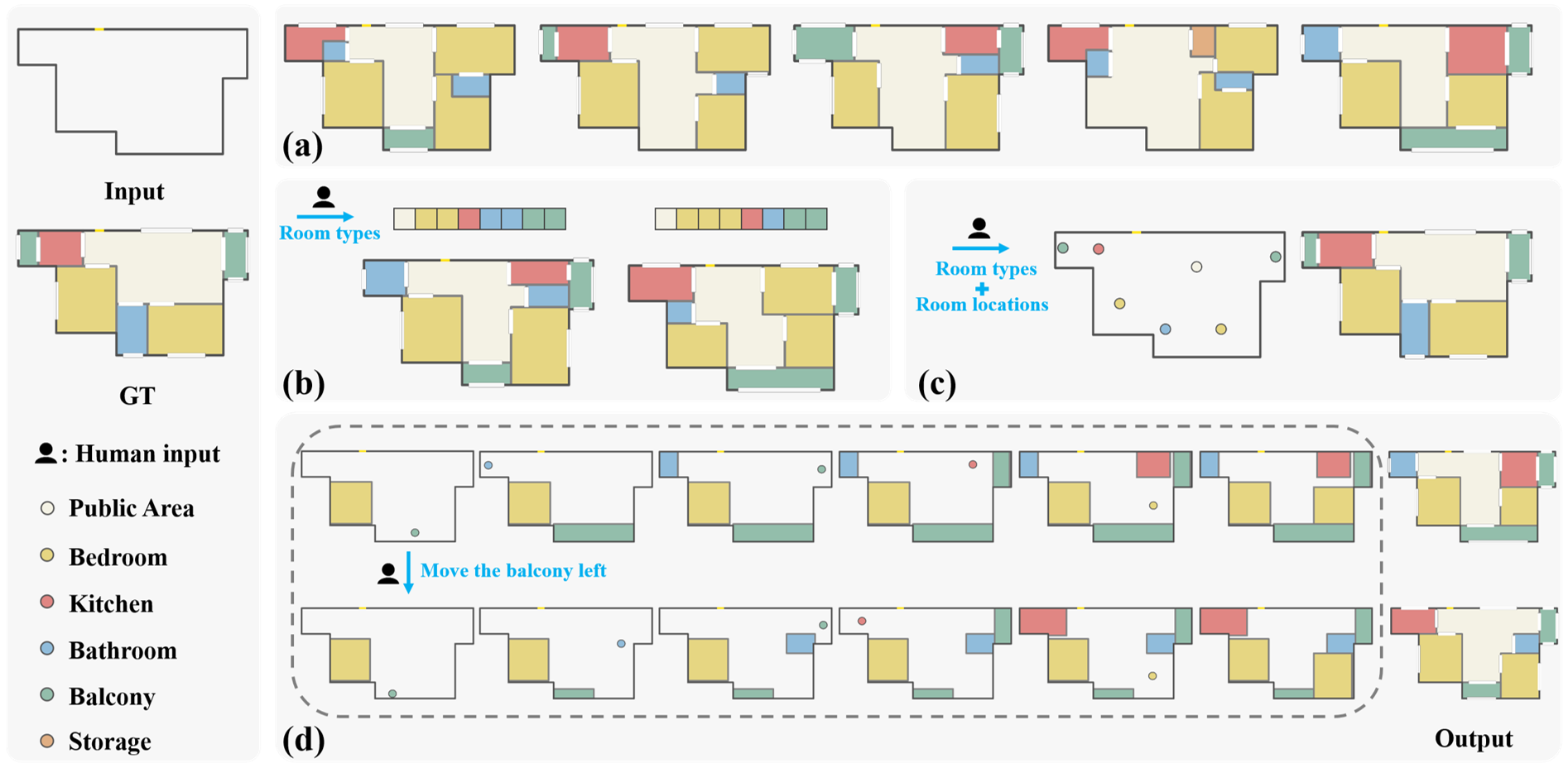

Abstract

Layout design is ubiquitous in applications such as architecture and urban planning, and it involves a lengthy iterative design process. Recently, deep learning has been leveraged to generate layouts automatically via image generation, showing great potential to free designers from laborious routines. While automatic generation can greatly boost productivity, designer input remains crucial. An ideal AI-aided design tool should automate repetitive routines while accepting human guidance and providing smart, proactive suggestions. However, existing methods, which are mostly end-to-end approaches, have largely ignored the ability to involve humans in the loop. To this end, we propose a new human-in-the-loop generative model, iPLAN, which can not only generate layouts automatically but also interact with designers throughout the whole procedure, enabling humans and AI to co-evolve a sketchy idea gradually into the final design. iPLAN is evaluated on diverse datasets and compared with existing methods. The results show that iPLAN has high fidelity in producing layouts similar to those from human designers, great flexibility in accepting designer inputs and providing design suggestions accordingly, and strong generalizability when facing unseen design tasks and limited training data.

Resources

- Feixiang He, Yanlong Huang, He Wang. iPLAN: interactive and procedural layout planning. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

BibTeX:

    @inproceedings{he2022iplan,
      author    = {Feixiang He and Yanlong Huang and He Wang},
      title     = {iPLAN: interactive and procedural layout planning},
      booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      year      = {2022}
    }

Acknowledgement

We thank Jing Li for her input on the design practice. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 899739 CrowdDNA and the Marie Skłodowska-Curie grant agreement No 101018395. Feixiang He has been supported by UKRI PhD studentship [EP/R513258/1, 2218576].