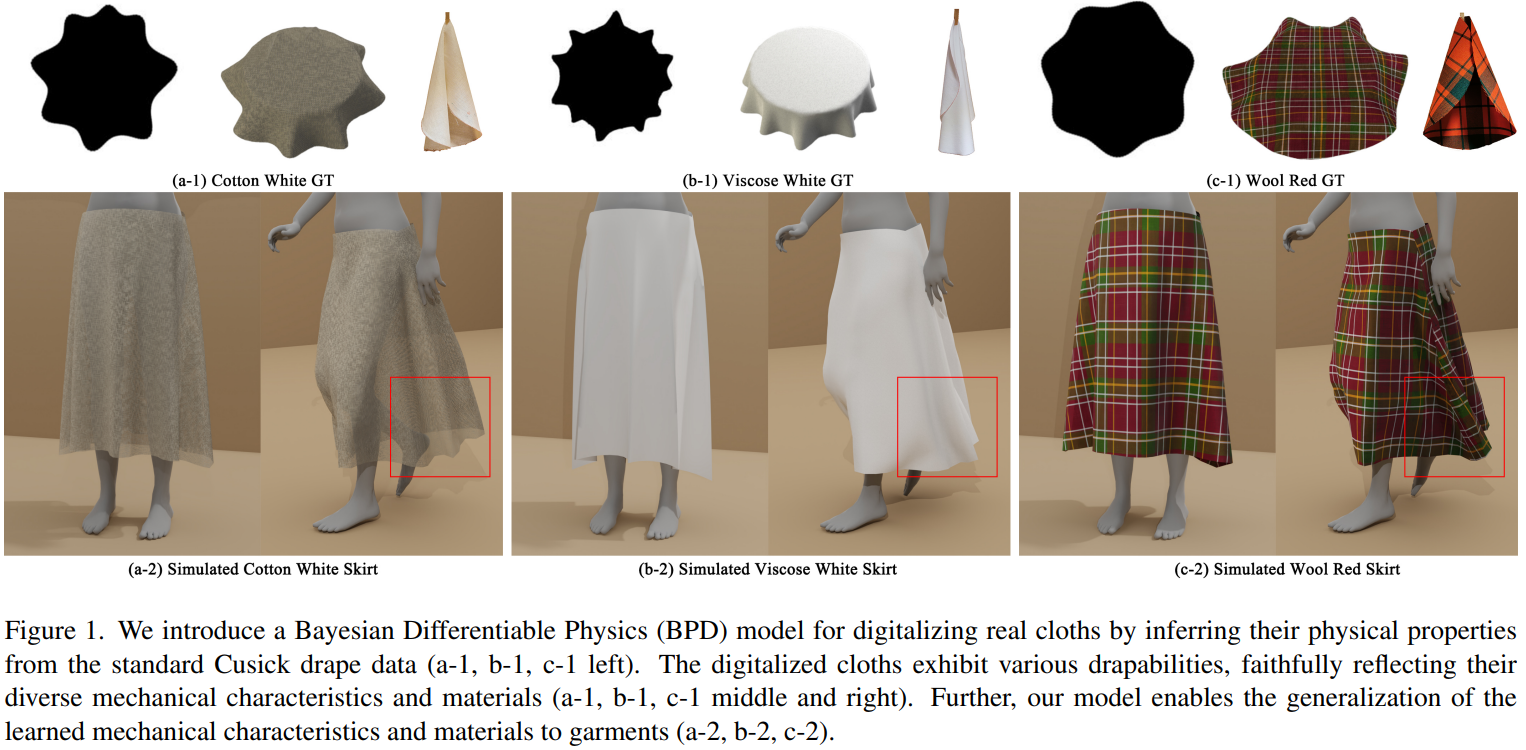

Abstract

We propose a new method for cloth digitalization. Unlike existing methods, which learn from data captured under relatively casual settings, we propose to learn from data captured under strictly tested measuring protocols and to find plausible physical parameters of the cloths. However, no such data currently exists, so we first propose a new dataset with accurate cloth measurements. Further, owing to the nature of the data capture process, the dataset is considerably smaller than those typically used in current deep learning. To learn from small data, we propose a new Bayesian differentiable cloth model that estimates the complex material heterogeneity of real cloths. It can provide highly accurate digitalization from very limited data samples. Through exhaustive evaluation and comparison, we show that our method is accurate in cloth digitalization, efficient in learning from limited data samples, and general in capturing material variations. Code and data are available at https://github.com/realcrane/Bayesian-Differentiable-Physics-for-Cloth-Digitalization
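The general recipe summarized in the abstract (treat a physical parameter as a probability distribution, simulate, and update that distribution with gradients propagated through the simulator) can be illustrated on a deliberately tiny problem. What follows is a minimal sketch under stated assumptions, not the authors' cloth model: the "physics" is a hypothetical 1D damped spring with one unknown stiffness `k`, gradients come from forward-mode dual numbers rather than the reverse-mode autodiff usually used in practice, and the posterior is a single Gaussian q(k) = N(mu, sigma^2) fitted by variational inference with the reparameterization trick. All names (`Dual`, `simulate`, `nll_and_grad`, `fit_posterior`) and settings (noise level, prior, learning rates) are illustrative assumptions.

```python
import math
import random


class Dual:
    """Forward-mode autodiff number: a value and its derivative w.r.t. one input."""

    def __init__(self, val, der=0.0):
        self.val, self.der = float(val), float(der)

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __neg__(self):
        return Dual(-self.val, -self.der)


def simulate(k, steps=50, dt=0.05, damping=0.3):
    """Hypothetical toy 'physics': 1D damped spring, semi-implicit Euler.
    Accepts a float k, or a Dual k so every state also carries d/dk."""
    x, v = 1.0, 0.0
    traj = []
    for _ in range(steps):
        a = -k * x - damping * v
        v = v + dt * a
        x = x + dt * v
        traj.append(x)
    return traj


def nll_and_grad(k, observed, noise_std):
    """Gaussian negative log-likelihood (up to a constant) and its exact d/dk."""
    pred = simulate(Dual(k, 1.0))
    loss = sum((p - o) * (p - o) for p, o in zip(pred, observed)) * (0.5 / noise_std**2)
    return loss.val, loss.der


def fit_posterior(observed, noise_std, prior_mu=3.0, prior_std=1.0, steps=300,
                  n_samples=8, lr_mu=3e-4, lr_log_sigma=1e-2, seed=1):
    """Fit q(k) = N(mu, sigma^2) by gradient descent on the negative ELBO,
    sampling k = mu + sigma * eps so gradients flow through the simulator."""
    rng = random.Random(seed)
    mu, log_sigma = prior_mu, math.log(0.2)
    for _ in range(steps):
        sigma = math.exp(log_sigma)
        g_mu = g_ls = 0.0
        for _ in range(n_samples):
            eps = rng.gauss(0.0, 1.0)
            _, dk = nll_and_grad(mu + sigma * eps, observed, noise_std)
            g_mu += dk / n_samples                # dk/dmu = 1
            g_ls += dk * sigma * eps / n_samples  # dk/dlog_sigma = sigma * eps
        # Analytic gradients of KL(q || N(prior_mu, prior_std^2)).
        g_mu += (mu - prior_mu) / prior_std**2
        g_ls += sigma**2 / prior_std**2 - 1.0
        mu -= lr_mu * g_mu
        log_sigma -= lr_log_sigma * g_ls
    return mu, math.exp(log_sigma)


if __name__ == "__main__":
    true_k, noise_std = 4.0, 0.05
    rng = random.Random(0)
    observed = [x + rng.gauss(0.0, noise_std) for x in simulate(true_k)]
    mu, sigma = fit_posterior(observed, noise_std)
    print(f"posterior over k: mean {mu:.3f}, std {sigma:.3f}")
```

With 50 noisy samples of a single trajectory, the fitted mean should land near the stiffness used to generate the data, while sigma shrinks well below the prior width; that uncertainty estimate is what a point-estimate fit would not provide from small data. The actual method estimates the heterogeneous material parameters of real cloth, which this toy does not attempt.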

Resources


Deshan Gong, Ningtao Mao, He Wang.

Bayesian Differentiable Physics for Cloth Digitalization. The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

BibTeX:

@inproceedings{gong2024bayesian,
  author = {Deshan Gong and Ningtao Mao and He Wang},
  title = {Bayesian Differentiable Physics for Cloth Digitalization},
  booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2024}
}

Acknowledgement

The project is partially supported by the Arts and Humanities Research Council (AHRC) UK under project FFF (AH/S002812/1).