Abstract

Action recognition is widely used in applications such as autonomous vehicles and surveillance, where robustness is a primary concern. With the recent rise of adversarial attacks, which automatically and strategically compute data perturbations to fool well-trained classifiers, this project investigates the vulnerability of existing classifiers to adversarial attacks and how to improve their resistance and robustness.

Resources

-

, , , , , , .

TASAR: Transfer-based Attack on Skeletal Action Recognition.

The International Conference on Learning Representations (ICLR).

2025

Conference

Paper Code BibTex @inproceedings{diao2025tasar, author = {Yunfeng Diao and Baiqi Wu and Ruixuan Zhang and Ajian Liu and Xingxing Wei and Meng Wang and He Wang}, title = {TASAR: Transfer-based Attack on Skeletal Action Recognition}, booktitle = {The International Conference on Learning Representations (ICLR)}, year = {2025} } -

, , , , , , .

Understanding the Vulnerability of Skeleton-based Human Activity Recognition via Black-box Attack.

Pattern Recognition.

2024

Journal

Paper BibTex @article{diao2022understanding, author = {Yunfeng Diao* and He Wang* and Tianjia Shao and Yongliang Yang and Kun Zhou and David Hogg and Meng Wang}, title = {Understanding the Vulnerability of Skeleton-based Human Activity Recognition via Black-box Attack}, journal = {Pattern Recognition}, pages = {110564}, year = {2024} } -

, .

Post-train Black-box Defense via Bayesian Boundary Correction.

arxiv.

2024

Preprint

Paper BibTex @misc{wang2023defending, author = {He Wang and Yunfeng Diao}, title = {Post-train Black-box Defense via Bayesian Boundary Correction}, series = {arxiv}, year = {2024} } -

, , , , .

Hard No-Box Adversarial Attack on Skeleton-Based Human Action Recognition with Skeleton-Motion-Informed Gradient.

The Internaitional Conference on Computer Vision (ICCV).

2023

Conference

Paper Supplement Video Code BibTex @inproceedings{lu2023hard, author = {Zhengzhi Lu and He Wang and Ziyi Chang and Guoan Yang and Hubert PH Shum}, title = {Hard No-Box Adversarial Attack on Skeleton-Based Human Action Recognition with Skeleton-Motion-Informed Gradient}, booktitle = {The Internaitional Conference on Computer Vision (ICCV)}, year = {2023} } -

, , , .

Defending Black-box Skeleton-based Human Activity Classifiers.

The AAAI Conference on Artificial Intelligence (AAAI).

2023

Conference

Paper Video Code BibTex @inproceedings{wang2023defending_1, author = {He Wang and Yunfeng Diao and Zichang Tan and Guodong Guo}, title = {Defending Black-box Skeleton-based Human Activity Classifiers}, booktitle = {The AAAI Conference on Artificial Intelligence (AAAI)}, year = {2023} } -

, , , , .

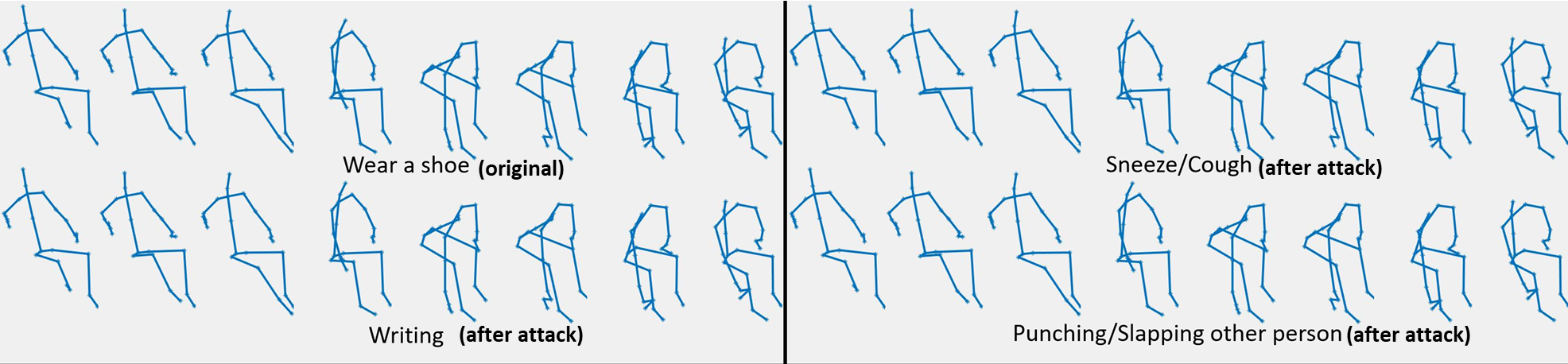

BASAR: Black-box attack on skeletal action recognition.

The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

2021

Conference

Paper Supplement Video Code Errata BibTex @inproceedings{diao2021basar, author = {Yufeng Diao and Tianjia Shao and Yongliang Yang and Kun Zhou and He Wang}, title = {BASAR: Black-box attack on skeletal action recognition}, booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, pages = {7597--7607}, year = {2021} } -

, , , , , , .

Understanding the robustness of skeleton-based action recognition under adversarial attack.

The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

2021

Conference

Paper Supplement Video Code BibTex @inproceedings{wang2021understanding, author = {He Wang and Feixiang He and Zhexi Peng and Tianjia Shao and Yongliang Yang and Kun Zhou and David Hogg}, title = {Understanding the robustness of skeleton-based action recognition under adversarial attack}, booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, pages = {14656--14665}, year = {2021} }

Acknowledgement

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 899739 (CrowdDNA), EPSRC (EP/R031193/1), NSF China (No. 61772462, No. U1736217), RCUK grant CAMERA (EP/M023281/1, EP/T014865/1) and the 100 Talents Program of Zhejiang University.